CDN Routing for Multi-CDN: How to Serve Users Faster No Matter Where They Are

Learn how multi-CDN routing works and how smart traffic decisions serve users faster everywhere by choosing the best path per request.


You push a big launch. People near your main data center say it feels fast. People far away say the app hangs, video stutters, checkout spins, or the stream drops just as the match gets exciting.

Nothing changed in your code. What changed is the path. Their packets took a longer, more crowded road across the internet.

That road is what CDN routing controls.

When you run a Multi CDN setup, you are deciding, in real time, which network and which edge will serve each request. Done well, that decision makes users feel close to you, no matter where they actually are.

Why A Single CDN Stops Being Enough

A single CDN can be great when:

- Your users are mostly in one region

- Your traffic is not huge

- You can accept some risk

As you grow, the weak spots show up.

Even a huge global CDN has places where it is not strong. Maybe it has few points of presence in one country or poor peering with a local internet provider. A user might be in São Paulo but get served from Miami. That extra distance adds round trip time. You see:

- Slower time to first byte

- More buffering

- Less responsive apps

If that provider has an outage or a bad config, your whole front door is gone. Revenue drops, support tickets explode, and there is nothing you can switch to in the moment.

You Also Lose Leverage

With a single vendor, you pay what the contract says. With Multi CDN, you can move traffic and use that as real pressure in price talks.

So you start thinking in a new way. Instead of “which CDN should I pick,” you ask “how should I route between multiple CDNs for speed, uptime, and cost.”


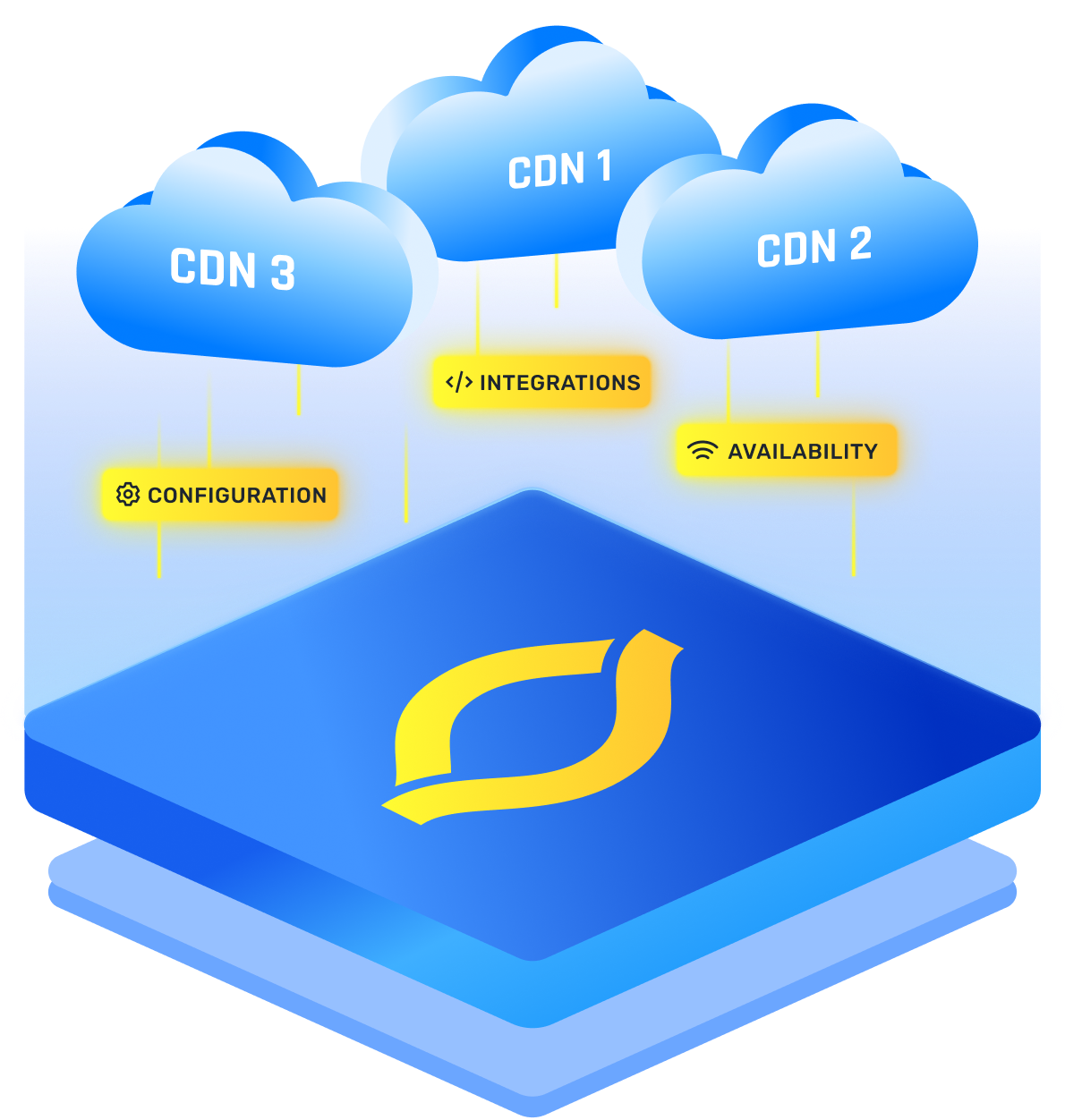

What Multi CDN Looks Like

With Multi CDN, you treat each CDN as one more road you can send traffic down. Together they become a larger virtual network.

A simple mental picture:

- CDN A is strong in North America and Europe

- CDN B is strong in Asia and inside one large country firewall

- CDN C is cheaper in parts of South America and Africa

Your control plane sits in front and decides:

For this user, right now, which road should I use?

That decision is the heart of CDN request routing.

Two Common Traffic Patterns

You will usually pick one of these:

- Failover style

- You send almost all traffic to one CDN

- You keep a second CDN warm and ready

- If health checks fail for the first, you switch to the second

- Simple to start with, but you do not get full performance gains, and the backup cache can be cold.

- Always on style

- You spread traffic across two or more CDNs all the time

- Split can be simple, like fifty fifty, or driven by data

- All caches stay warm, and you can shift traffic quickly

- This is more work but gives smoother failover and more control.
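Both patterns come down to a weight per CDN. Here is a minimal sketch in Python, assuming two hypothetical CDNs named `cdn-a` and `cdn-b`. The function names are illustrative, and a real traffic manager would apply these weights in its DNS answers rather than in application code.

```python
import random

def failover_weights(primary, backup, primary_healthy):
    """Failover style: all traffic goes to the primary unless its health checks fail."""
    if primary_healthy:
        return {primary: 1.0, backup: 0.0}
    return {primary: 0.0, backup: 1.0}

def pick_cdn(weights, rng=random):
    """Always on style: choose one CDN per request, in proportion to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]
```

With `{"cdn-a": 0.5, "cdn-b": 0.5}` you get the fifty fifty split. Changing the numbers is how a data driven split would shift traffic.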

Using Origin Shield

On top of either pattern, many teams add an “origin shield” or mid tier:

- All CDNs pull from one shield layer

- The shield pulls from your true origin

- If many edge nodes ask for the same file, the shield fetches it once and serves the rest

This protects your origin from big spikes and lowers egress costs.
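To make the shield idea concrete, here is a toy sketch in Python. `OriginShield` and `fetch_from_origin` are hypothetical names, and the sketch leaves out things a real shield needs, such as TTLs, eviction, and handling of concurrent requests.

```python
class OriginShield:
    """Toy mid tier cache: every CDN asks the shield, and the shield asks the origin once."""

    def __init__(self, fetch_from_origin):
        self._fetch = fetch_from_origin  # callable that takes a path and returns bytes
        self._cache = {}
        self.origin_fetches = 0          # counts how often the true origin was hit

    def get(self, path):
        if path not in self._cache:
            # Cache miss: this is the only place the origin is contacted.
            self.origin_fetches += 1
            self._cache[path] = self._fetch(path)
        return self._cache[path]
```

If three CDNs each request the same file through one `OriginShield`, `origin_fetches` ends at one instead of three.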

How The Path (Multi-CDN Routing) Is Chosen

To answer “how does CDN routing work” in practice, it helps to follow one request from your user.

Imagine this path:

- User types your URL or taps Play

Their device asks the DNS system “where is this domain?”

- Your smart DNS or traffic manager replies

Instead of a fixed answer, it runs rules:

- Where is this user or their network

- Which CDNs are healthy right now

- What performance data do we have

- What are our cost and contract limits today

- Based on this, it returns an answer that points to one CDN, not all.

- The chosen CDN runs its own edge routing logic

Inside that CDN:

- Anycast and its internal routing pick a nearby edge

- That edge checks its cache

- If it has the object, it serves it right away

- If not, it pulls from your origin or from your shield

- Response flows back to the user

If you chose well, the round trip is short, and the experience feels snappy.

This whole decision chain, from DNS answer to edge choice, is what people mean by CDN routing or CDN request routing.

In a Multi CDN setup, your control plane has to steer that chain, not the vendor. A good edge routing strategy aims to make this path as short and stable as possible, for every user, all day long.

Multi-CDN Routing Methods You Will Use

You have a few main tools to control how requests flow between CDNs. Here is a simple view.

Often you mix these. For example:

- DNS picks a CDN based on health and broad performance data

- Client side logic inside your player tests two CDNs and picks the best for that user for the next set of video segments

The key idea is this: you add layers of control, but each layer should be simple, testable, and observable.

Feeding Routing With Real Data

CDN routing choices are only as good as the data you give them. There are two main data sources you will hear about.

Synthetic Checks

These are robot probes that live in data centers around the world. They:

- Ping your CDNs from clean networks

- Measure latency and availability

- Alert you when a region clearly breaks

They are useful, but they miss many problems. Real users sit behind mobile networks, home routers, and congested links. Probes do not feel that.

Real User Monitoring (RUM)

RUM measures real people on real networks.

A common flow looks like this:

- You add a small script to your site or app

- The script, very gently, loads tiny files from each CDN in the background

- It measures connection time, time to first byte, and throughput

- It sends these numbers to your control plane

- Your DNS or routing engine uses that data to change weights and choices

Over time you get a live “weather map” of the internet:

- You see that CDN A is slow for one mobile network in one city

- You see that CDN B is strong in another region at night

- You can steer at the level of “users on this ISP in this country” instead of just “users in this country”

This is how modern Multi CDN routing works at scale. The more RUM you have, the smarter your choices.
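A rough sketch of how that weather map could be built from raw samples, in Python. The sample fields (`country`, `isp`, `cdn`, `ttfb_ms`) are assumptions for illustration and do not come from any specific RUM provider.

```python
from collections import defaultdict
from statistics import median

def median_ttfb(samples):
    """Group RUM samples by (country, isp, cdn) and take the median TTFB in ms."""
    buckets = defaultdict(list)
    for s in samples:
        buckets[(s["country"], s["isp"], s["cdn"])].append(s["ttfb_ms"])
    return {key: median(values) for key, values in buckets.items()}

def fastest_cdn_by_segment(samples):
    """For each (country, isp) segment, return the CDN with the lowest median TTFB."""
    best = {}
    for (country, isp, cdn), ms in median_ttfb(samples).items():
        segment = (country, isp)
        if segment not in best or ms < best[segment][1]:
            best[segment] = (cdn, ms)
    return {segment: cdn for segment, (cdn, _) in best.items()}
```

The median is used here so a few very slow samples do not dominate a segment. A production system would also want a minimum sample count per segment before trusting the result.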


Designing Your Own Multi CDN Routing Plan

Let us turn this into something you can actually build. Here is a simple step by step path, with the logic behind each step.

Step 1: Map Your Users And Your Pain

You cannot improve what you do not see.

Do this:

- List your top countries and regions by traffic and revenue

- For each, look at latency, error rate, and conversion or play success

Your goal is to spot patterns like:

- Europe is fine, but Southeast Asia is slow

- Mobile in one large market buffers a lot in the evening

These patterns tell you where better CDN routing will pay off first.

Step 2: Pick A Small Set Of CDNs On Purpose

Start with something realistic:

- One broad, global CDN with strong security features

- One or two regional or price friendly CDNs that shine in target markets

Ask vendors clear questions:

- Where are your strongest POPs

- Which ISPs do you peer well with in my key regions

- Do you support features I care about, like HTTP 3 or WebSockets

You are not just buying capacity. You are buying a footprint and a set of edge abilities.

Step 3: Set Up Basic DNS Based Steering

Now you put a simple control plane in front.

Core actions:

- Use a smart DNS provider or global traffic manager

- Point your main user facing domain to it

- Configure records so that for each region, DNS can choose between your CDNs

Start simple with logic like:

- If country is inside a strict firewall, use CDN that works best there

- Else if region is where CDN B is known strong, prefer CDN B

- Else use CDN A

Use short DNS TTLs, for example thirty or sixty seconds, so you can change your mind fast when things break.
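The starter logic above can be sketched as a small function. Everything named here is a placeholder: the country codes, the `cdn-a.example` and `cdn-b.example` hostnames, and the thirty second TTL are illustrative only. In practice you would express the same rules in your DNS provider's own configuration.

```python
# Hypothetical placeholders: replace with your own country codes and CDN hostnames.
BEST_CDN_BEHIND_FIREWALL = {"XX": "www.cdn-b.example"}
CDN_B_STRONG_COUNTRIES = {"JP", "SG", "IN"}
DEFAULT_CDN = "www.cdn-a.example"
TTL_SECONDS = 30  # short TTL so a changed decision takes effect quickly

def steer(country_code):
    """Return the CNAME target and TTL a DNS answer would carry for this country."""
    if country_code in BEST_CDN_BEHIND_FIREWALL:
        target = BEST_CDN_BEHIND_FIREWALL[country_code]
    elif country_code in CDN_B_STRONG_COUNTRIES:
        target = "www.cdn-b.example"
    else:
        target = DEFAULT_CDN
    return {"cname": target, "ttl": TTL_SECONDS}
```

Keeping the rules in plain data like this makes them easy to review and to test before they go live.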

Step 4: Add Health Checks And Gentle Failover

Next, you make sure a dead CDN never gets traffic.

For each CDN:

- Set up HTTP health checks from a few regions

- Check for a specific string in the response, not just a status code

- Mark a CDN as unhealthy only if most probes fail, so one noisy probe does not flip the switch

Then, in your DNS rules:

- If CDN is unhealthy, stop returning it for new queries

- Keep a small logging window so you can see when and why it failed

This gives you automatic failover without wild flapping.
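Here is a minimal sketch of those health rules in Python. The marker string `healthcheck-ok` and the function names are assumptions, and the actual HTTP probing is left out so the decision logic stays visible.

```python
def probe_passed(status_code, body, marker="healthcheck-ok"):
    """A probe passes only if the status is 200 AND the expected string is present."""
    return status_code == 200 and marker in body

def cdn_is_healthy(probe_results):
    """probe_results: list of booleans, one per probe region.

    The CDN is marked unhealthy only when more than half of the probes fail.
    """
    if not probe_results:
        return True  # assumption: with no probe data, leave the CDN in rotation
    failures = probe_results.count(False)
    return failures <= len(probe_results) / 2

def healthy_cdns(results_by_cdn):
    """Filter a {cdn_name: [probe results]} mapping down to CDNs safe to return."""
    return [cdn for cdn, results in results_by_cdn.items() if cdn_is_healthy(results)]
```

The string check matters because a CDN can return a 200 status with an error page. The majority rule keeps one noisy probe from pulling a CDN out of rotation.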

Step 5: Turn On RUM And Performance Based Routing

Once you trust basic health checks, bring in RUM to improve speed.

Practical way to do it:

- Add a RUM script or SDK from a provider you like

- Let it run for at least a week to collect a broad set of data

- Feed that data into your DNS or steering engine

Then define simple rules, for example:

- For each region and ISP, pick the CDN with the lowest recent latency

- Only switch if the new CDN is at least fifteen or twenty percent faster

- Shift traffic gradually, for example move from fifty fifty to sixty forty, then watch

This avoids a classic problem where you send all traffic to the “fastest” CDN at once, overload it, and create a loop of bad routing.
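The threshold and the gradual shift can be sketched in a few lines of Python. The fifteen percent gain and the ten point step are the example values from the rules above, not recommendations, and the function names are illustrative.

```python
def should_switch(current_ms, candidate_ms, min_gain=0.15):
    """Switch only if the candidate is at least `min_gain` faster than the current CDN."""
    return candidate_ms <= current_ms * (1 - min_gain)

def shift_weights(weights, toward, step=0.10):
    """Move up to `step` of total traffic share toward one CDN.

    The share is taken from the other CDNs in proportion to what they hold now.
    """
    others = {name: w for name, w in weights.items() if name != toward}
    available = sum(others.values())
    moved = min(step, available)
    new_weights = {}
    for name, w in others.items():
        new_weights[name] = w - moved * (w / available) if available else w
    new_weights[toward] = weights[toward] + moved
    return new_weights
```

Calling `shift_weights({"cdn-a": 0.5, "cdn-b": 0.5}, "cdn-a")` moves the split from fifty fifty to roughly sixty forty. You then watch the data before taking the next step.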

Step 6: Protect Your Origin And Your Wallet

With multiple CDNs, it is easy to triple origin traffic if you are not careful.

You can prevent that with three actions:

- Put an origin shield or mid tier cache in front of your true origin

- Configure all CDNs to fetch from that shield, not directly from origin

- Use cache rules so popular objects stay hot at the shield

On the cost side, watch:

- Commit usage per CDN

- Overage risk as a percentage of commit

- Cost per gigabyte by region

You can then add cost aware rules like:

- Early in the month, favor the CDN where you still have a large commit

- As one CDN nears ninety percent of its commit, start to shed load to the next one

- In very expensive regions, send traffic to the cheaper CDN unless latency crosses a strict limit

This is how Multi CDN routing turns into a lever for FinOps, not just performance.
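One possible way to combine these cost rules, sketched in Python. The `CdnStatus` fields, the ninety percent threshold, and the tie breaking order are assumptions made for illustration. Your contracts will likely need different rules.

```python
from dataclasses import dataclass

@dataclass
class CdnStatus:
    name: str
    used_gb: float      # traffic billed so far this month
    commit_gb: float    # monthly commit in the contract
    cost_per_gb: float  # price in this region
    latency_ms: float   # recent latency for this region

def pick_by_cost(cdns, latency_limit_ms, shed_at=0.90):
    """Pick a CDN name by cost, treating the latency limit as a hard constraint."""
    # Cost only decides among CDNs within the latency limit (fall back to all if none).
    acceptable = [c for c in cdns if c.latency_ms <= latency_limit_ms] or list(cdns)
    # Prefer CDNs still below the shed threshold, favoring the most unused commit.
    under_commit = [c for c in acceptable if c.used_gb < shed_at * c.commit_gb]
    if under_commit:
        return max(under_commit, key=lambda c: c.commit_gb - c.used_gb).name
    # Everyone is near or past commit: take the cheapest per gigabyte.
    return min(acceptable, key=lambda c: c.cost_per_gb).name
```

Putting latency first means a cheap but slow CDN never wins on price alone.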

Step 7: Test Failure Like You Mean It

Finally, you must know that your setup behaves under stress.

Plan regular drills:

- For a small slice of users, pretend one CDN is down by changing DNS or firewall rules

- Watch if traffic flows cleanly to the other CDN

- Check error rates, latency, and caching at the shield

You want your team to be comfortable switching and rolling back, with clear runbooks. Your routing is code now, and code needs tests.
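A drill can start as a unit test long before you touch live DNS. The sketch below, in Python, fakes one CDN being down and checks where traffic lands. `route` is a stand-in for whatever steering logic you actually run, and the names are hypothetical.

```python
def route(preference, healthy):
    """Return the first CDN in preference order that is currently healthy."""
    for cdn in preference:
        if healthy.get(cdn, False):
            return cdn
    raise RuntimeError("no healthy CDN available")

def run_drill(preference, cdn_to_fail):
    """Pretend one CDN is down and report which CDN would receive the traffic."""
    healthy = {cdn: True for cdn in preference}
    healthy[cdn_to_fail] = False
    return route(preference, healthy)
```

For example, `run_drill(["cdn-a", "cdn-b"], "cdn-a")` should come back with `cdn-b`. If it raises instead, you have found a gap in your failover plan on a quiet day rather than during an outage.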

Conclusion

Multi CDN is not just “adding another vendor.” It is learning to control the path between your user and your content.

When you understand how CDN routing works, you stop guessing. You measure real users, you send each request to the best edge you have at that moment, and you keep your origin and your budget safe.

FAQs

What Is CDN Routing In A Multi CDN Setup?

CDN routing is the logic that decides which CDN should serve a specific user request. Instead of sending all traffic to one vendor, your routing layer looks at performance, health, geography, and cost, then chooses the fastest and most stable CDN for that user at that moment. This helps you reduce latency, avoid outages, and improve global performance.

How Does Multi CDN Routing Improve Speed?

Multi CDN routing improves speed by giving users the closest and cleanest network path available. When one CDN has weak peering or high congestion in a region, your routing system shifts traffic to another CDN with better performance there. This reduces time to first byte, lowers buffering, and makes your site or app feel more responsive across different countries and networks.

Do I Need RUM Data To Build A Good Routing Strategy?

You can start without RUM, but RUM makes your routing genuinely smart. Synthetic probes only show clean network behavior from data centers, while RUM measures real users on real mobile and home networks. When you feed RUM into your routing engine, you catch performance issues that probes miss, and you can steer traffic at the level of specific ISPs or cities.

How Do I Prevent Route Flapping Between CDNs?

You prevent route flapping by adding thresholds, dampening, and gradual traffic shifts. Instead of switching CDNs the moment one becomes slightly faster, you only switch when the difference is meaningful, for example more than 15 or 20 percent. Then you move traffic slowly to test stability. This keeps caches warm, avoids congestion, and prevents rapid back and forth swings.

Does Multi CDN Raise My Infrastructure Costs?

Not if you manage it well. With commit-aware routing, you can send early-month traffic to the CDN where you have unused commit, then spill to others when you get close to overage. An origin shield also reduces redundant origin pulls across CDNs. When done right, Multi CDN can actually lower total bandwidth cost while raising performance and reliability at the same time.
