Ever been in the middle of something important online, and suddenly everything slows down? It's like being stuck in traffic when you're already late. Annoying, isn't it? Well, cache prefetching is like having a secret shortcut in these digital traffic jams.

When you're doing your thing on the internet, like watching videos, working, or even just browsing, cache prefetching is quietly working in the background. It guesses what you'll need next and gets it ready for you.

What is Cache Prefetching?

Cache prefetching is a technique that loads data into a cache before it is actually requested, so that it can be served quickly when the request arrives. Let's break it down simply:

- Cache: This is like a storage space in your computer where frequently accessed data is kept for quick access. You can think of it like a pantry in your kitchen, where you keep snacks and ingredients you use often.

- Prefetching: This is the process of predicting and fetching data that you might need soon, even before you actually ask for it.

So, when we combine these two, cache prefetching is like having someone in your kitchen who knows you're about to get hungry for a snack and already has it ready on the counter. In the online world, this means that the websites, videos, or documents you want to open load faster because your computer anticipated your needs and prepared the data in advance.

It's like a time-saving assistant working behind the scenes: what you need next is already there, waiting for you, which makes for a smoother and faster online experience.
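To make the pantry analogy concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the `PrefetchingCache` class, the `fetch` and `predict` callbacks, and the `pantry` data are hypothetical names, not part of any real library. It only shows the idea of loading a predicted item before anyone asks for it.

```python
from typing import Callable, Dict, Iterable


class PrefetchingCache:
    """Cache that also loads the keys a predictor says are likely to be needed next."""

    def __init__(self, fetch: Callable[[str], str],
                 predict: Callable[[str], Iterable[str]]) -> None:
        self.fetch = fetch        # slow lookup, e.g. a network or disk read
        self.predict = predict    # guesses which keys follow the current one
        self.store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> str:
        if key in self.store:
            self.hits += 1
        else:
            self.misses += 1
            self.store[key] = self.fetch(key)
        # Prefetch: load predicted keys now so later requests find them cached.
        for nxt in self.predict(key):
            if nxt not in self.store:
                self.store[nxt] = self.fetch(nxt)
        return self.store[key]


# Hypothetical "pantry": asking for bread suggests butter will be wanted next.
pantry = {"bread": "sliced bread", "butter": "salted butter"}
cache = PrefetchingCache(
    fetch=lambda k: pantry[k],
    predict=lambda k: ["butter"] if k == "bread" else [],
)
cache.get("bread")    # miss: fetched on demand, and butter is prefetched
cache.get("butter")   # hit: already waiting in the cache
```

In a real system the prefetch step would usually run in the background rather than inside `get`, so the caller does not wait for it. It is kept inline here to keep the sketch short.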


Principles of Cache Prefetching

Cache prefetching is designed to boost the efficiency of the cache system. Its core principle is proactive fetching: data or instructions are loaded into the cache before they are requested, which raises the cache hit ratio.

How prefetching is organized depends on the requirements and architecture of the system, and each approach suits different workloads.

Types of Cache Prefetching

Cache prefetching is categorized based on how data is predicted and retrieved before a request is made. Below are the main types of cache prefetching techniques:

1. Instruction Prefetching

- Purpose: Preloads CPU instructions before they are needed.

- Use Case: Used in modern processors to improve execution speed in software applications.

- Example: The CPU prefetches the next few instructions from memory before they are required, reducing stalls in execution pipelines.

2. Data Prefetching

- Purpose: Loads data from memory into the cache before it's requested.

- Use Case: Used in AI, machine learning, and data processing applications.

- Example: A database query engine prefetches records into memory to speed up subsequent searches.

3. Hardware Prefetching

- Purpose: Prefetching is handled by the CPU or memory controller, independent of software.

- Use Case: Built into modern CPUs and GPUs to minimize latency in repetitive data access patterns.

- Example: A graphics processor prefetches texture data before rendering a scene in a video game.

4. Software Prefetching

- Purpose: Prefetching is controlled at the software level, typically within applications.

- Use Case: Used in web browsers, media players, and database systems to optimize performance.

- Example: YouTube prefetches the next few seconds of a video to reduce buffering.

5. Adaptive Prefetching

- Purpose: Adjusts what is prefetched, and how aggressively, based on observed usage patterns, in some systems with the help of machine learning.

- Use Case: Used in high-performance computing and AI-based systems.

- Example: AI-based predictive caching in Google Search, where results are preloaded based on previous searches.
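As a rough illustration of software prefetching, the sketch below loads the next chunk of a stream in the background while the current chunk is being used, much like a player buffering the next few seconds of video. The `read_ahead` function and the `load_chunk` loader are hypothetical names chosen for this example, and the loader simply fabricates bytes instead of reading from a network or disk.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterator, List


def read_ahead(load_chunk: Callable[[int], bytes], count: int) -> Iterator[bytes]:
    """Yield chunks 0..count-1, loading chunk i+1 in the background while chunk i is used."""
    if count <= 0:
        return
    with ThreadPoolExecutor(max_workers=1) as pool:
        pending = pool.submit(load_chunk, 0)
        for i in range(count):
            chunk = pending.result()          # wait for the chunk requested earlier
            if i + 1 < count:
                pending = pool.submit(load_chunk, i + 1)  # prefetch the next one
            yield chunk


# Hypothetical loader standing in for a network or disk read.
def load_chunk(i: int) -> bytes:
    return f"segment-{i}".encode()


segments: List[bytes] = list(read_ahead(load_chunk, 3))
```

The prefetch depth here is a single chunk. A deeper read-ahead would hide more latency, at the cost of more memory and possibly wasted loads if the user stops early.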

Cache Prefetching Algorithms & Their Use Cases

Different cache prefetching algorithms optimize performance for specific applications.

Example:

A video streaming service might use Markov Prefetching to predict which video a user is likely to watch next and preload it for a seamless viewing experience.
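A first-order Markov predictor is one simple way to sketch this idea: it counts which item tends to follow which, and suggests the most frequent successor as the thing to preload. The `MarkovPredictor` class and the viewing history below are invented for illustration, and a production system would likely use richer signals than the previous video alone.

```python
from collections import Counter, defaultdict
from typing import DefaultDict, Hashable, Optional


class MarkovPredictor:
    """First-order Markov model: predicts the next item from the current one only."""

    def __init__(self) -> None:
        self.transitions: DefaultDict[Hashable, Counter] = defaultdict(Counter)
        self.previous: Optional[Hashable] = None

    def record(self, item: Hashable) -> None:
        """Observe one access and count the transition from the previous access."""
        if self.previous is not None:
            self.transitions[self.previous][item] += 1
        self.previous = item

    def predict(self, item: Hashable) -> Optional[Hashable]:
        """Return the most frequently observed successor of item, if any."""
        followers = self.transitions.get(item)
        if not followers:
            return None
        return followers.most_common(1)[0][0]


# Hypothetical viewing history for one user.
predictor = MarkovPredictor()
for video in ["intro", "ep1", "ep2", "intro", "ep1", "trailer", "intro", "ep1", "ep2"]:
    predictor.record(video)

next_video = predictor.predict("ep1")   # candidate to preload
```

In this history, "ep1" was followed by "ep2" twice and by "trailer" once, so "ep2" is the item the sketch would preload.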

Advantages of Cache Prefetching

Cache prefetching offers several advantages in computing, each contributing to an overall improvement in system performance and user experience. Let's explore these benefits:

- Reduced Latency: The most significant advantage of cache prefetching is lower data access latency. Because the data is fetched in advance and stored in the cache, it can be served from the cache when it is actually needed instead of from slower storage.

- Increased Throughput: Prefetching can increase the throughput of a system. With data readily available in the cache, the processor can execute instructions without waiting for data fetch operations, thus handling more instructions in a given time.

- Improved Bandwidth Utilization: Cache prefetching can make better use of available bandwidth. By fetching data during periods of low bandwidth usage, it reduces the demand during peak periods, leading to more efficient overall bandwidth utilization.

- Enhanced User Experience: For end-users, the benefits translate into a smoother and faster computing experience. This is particularly noticeable in data-intensive tasks such as large database queries, complex scientific computations, or high-definition video streaming.

- Better CPU Utilization: Since the CPU doesn't have to wait as often for data to be fetched from main memory, it can spend more time executing instructions, leading to better CPU utilization and overall system efficiency.

- Reduction in Cache Misses: Cache prefetching directly aims at reducing the number of cache misses — instances where the data needed is not found in the cache. By predicting future data needs and prefetching them into the cache, the miss rate is lowered.

- Adaptability to Different Usage Patterns: Advanced prefetching algorithms can adapt to different usage patterns, making it a versatile solution across various applications and workloads.
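The effect on cache misses can be sketched with a small simulation. The code below replays a list of block numbers through a tiny LRU cache, once without prefetching and once with a simple "also load the next block" rule. The `hit_ratio` function, the cache capacity, and the access pattern are all assumptions chosen for the example, not measurements from a real system.

```python
from collections import OrderedDict
from typing import Iterable


def hit_ratio(accesses: Iterable[int], capacity: int, prefetch_next: bool) -> float:
    """Replay block accesses through an LRU cache and return hits / total accesses."""
    cache: "OrderedDict[int, None]" = OrderedDict()
    hits = total = 0

    def insert(block: int) -> None:
        cache[block] = None
        cache.move_to_end(block)
        if len(cache) > capacity:
            cache.popitem(last=False)   # evict the least recently used block

    for block in accesses:
        total += 1
        if block in cache:
            hits += 1
            cache.move_to_end(block)
        else:
            insert(block)
        if prefetch_next and block + 1 not in cache:
            insert(block + 1)           # next-block prefetch
    return hits / total if total else 0.0


sequential = list(range(100))           # a purely sequential scan
without = hit_ratio(sequential, capacity=8, prefetch_next=False)
with_prefetch = hit_ratio(sequential, capacity=8, prefetch_next=True)
```

For this purely sequential scan every access misses without prefetching, while with next-block prefetching only the very first access misses. The gain depends heavily on the access pattern: a random pattern would see little benefit from the same rule.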

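Cache Prefetching vs. Caching: What’s the Difference?

The main difference is timing. Plain caching is reactive: data is stored after it has been requested once, so only repeat requests are faster. Cache prefetching is proactive: data is loaded before it is requested at all, so even the first request can be served from the cache.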

Conclusion

To sum it all up, cache prefetching anticipates our needs and acts beforehand, ensuring that our online interactions, whether for work or leisure, are as smooth and efficient as possible. We've seen how it cleverly predicts and prepares the data we need next, much like a thoughtful friend who knows us well.

FAQs

1. What is the difference between Cache Prefetching and DNS Prefetching?

Cache prefetching loads data into local memory before it's needed, improving application performance. DNS prefetching, on the other hand, resolves domain names before the user clicks a link, reducing webpage loading time.

2. How can businesses protect against DNS Prefetching Security Issues?

DNS prefetching can leak browsing patterns to malicious actors. Businesses should:

- Disable DNS prefetching in sensitive applications.

- Use encrypted DNS (DNS-over-HTTPS or DNS-over-TLS).

- Monitor DNS queries for unusual behavior.

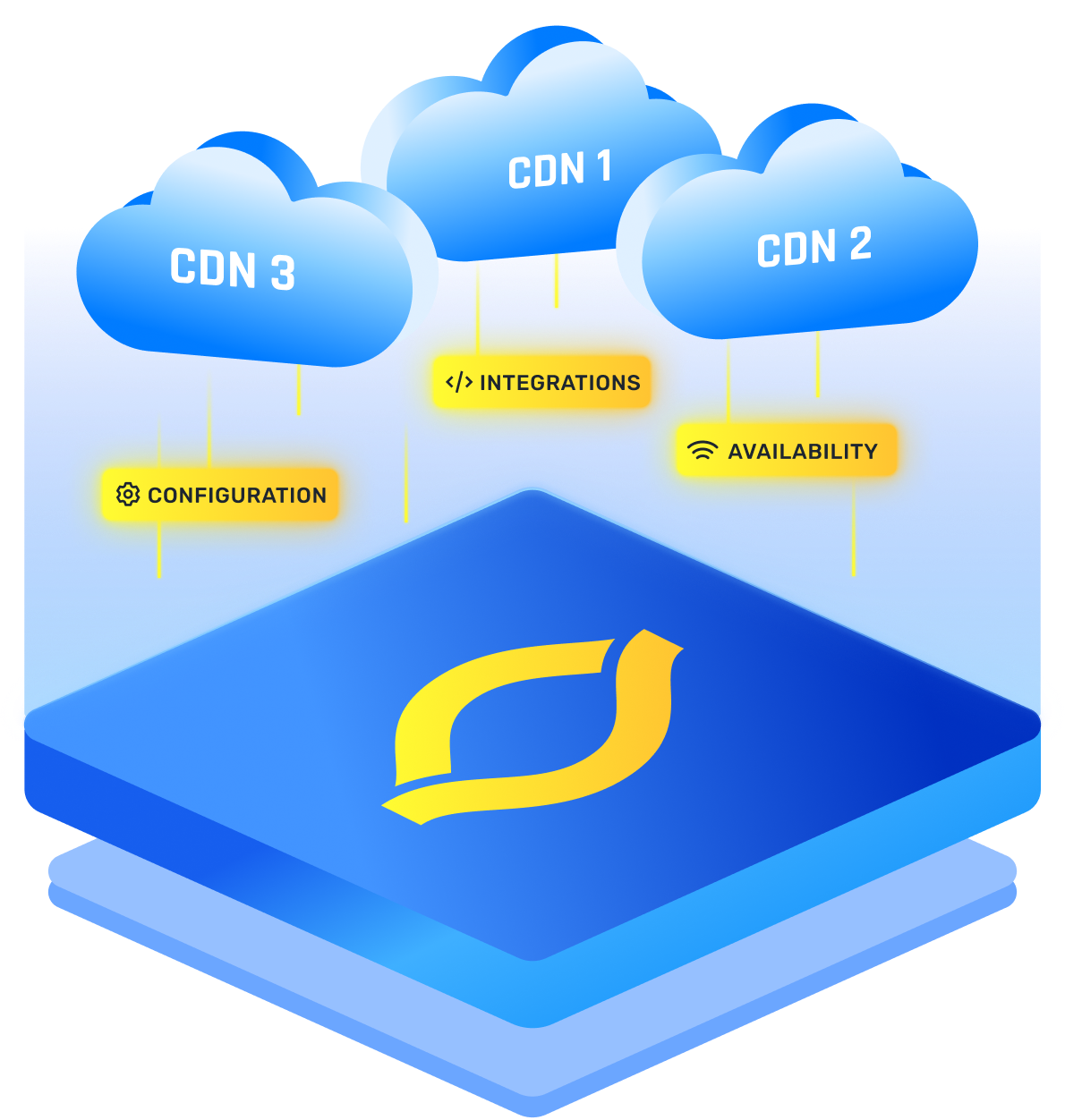

3. How do CDNs use Cache Prefetching for better performance?

Content Delivery Networks (CDNs) use cache prefetching to preload web pages, images, and videos before users request them. This reduces latency and improves load times by ensuring data is available on edge servers closer to the user.
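A toy model of this, sometimes called cache warming, is sketched below. The `EdgeCache` class, its `warm` and `serve` methods, and the sample paths are hypothetical and do not correspond to any particular CDN's API. The sketch only shows that content copied to the edge ahead of time can be served without another trip to the origin.

```python
from typing import Callable, Dict, Iterable


class EdgeCache:
    """Toy edge server: serves from its local store and falls back to the origin."""

    def __init__(self, origin_fetch: Callable[[str], bytes]) -> None:
        self.origin_fetch = origin_fetch
        self.store: Dict[str, bytes] = {}
        self.origin_requests = 0

    def _pull(self, path: str) -> bytes:
        self.origin_requests += 1
        self.store[path] = self.origin_fetch(path)
        return self.store[path]

    def warm(self, paths: Iterable[str]) -> None:
        """Prefetch: copy the given paths from the origin before any user asks."""
        for path in paths:
            if path not in self.store:
                self._pull(path)

    def serve(self, path: str) -> bytes:
        """Handle a user request, going to the origin only on a miss."""
        if path in self.store:
            return self.store[path]
        return self._pull(path)


# Hypothetical origin content.
origin = {"/index.html": b"<html>", "/logo.png": b"PNG", "/video.mp4": b"MP4"}
edge = EdgeCache(origin_fetch=lambda p: origin[p])
edge.warm(["/index.html", "/logo.png"])
before = edge.origin_requests
edge.serve("/index.html")
edge.serve("/logo.png")
served_without_origin = edge.origin_requests == before
```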
