Can a Multi-CDN Setup Help with Latency in Remote Areas?

Yes, a multi-CDN setup can absolutely help reduce latency in remote areas, but it’s not magic. The real benefit comes from having more than one content delivery network to choose from, so requests can be routed through the provider with the closest or fastest-performing edge node at that moment.

If one CDN has a gap in coverage or is underperforming, the other fills in. That flexibility is what gives you a shot at consistently low latency where a single CDN might fail.

Why Latency In Remote Areas Is Such A Problem

When you open a site, the request bounces around a network of cables, routers, and servers until it reaches the closest point of presence (PoP) of your CDN.

In major cities, you’ve got plenty of PoPs nearby. But in remote areas (think rural towns, islands, or regions without dense internet infrastructure) your requests may need to travel hundreds or even thousands of kilometers before hitting an edge server.

That extra distance means higher time to first byte (TTFB), slower responses, and frustrated users. A CDN latency test often shows these spikes clearly: in one region your site loads snappy-fast, but in another it crawls, all because of network geography.
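To put rough numbers on the distance penalty, here is a minimal back-of-the-envelope sketch. It assumes signals travel through fiber at about 200,000 km/s (roughly two thirds of the speed of light) and that the path is a straight line, which real routes never are, so treat the result as a floor rather than a prediction. The function name `min_rtt_ms` is just an illustration.

```python
# Rough lower bound on round-trip time from fiber distance alone.
# Assumption: light in fiber covers about 200,000 km/s, i.e. 200 km per millisecond.
FIBER_SPEED_KM_PER_MS = 200.0


def min_rtt_ms(distance_km: float) -> float:
    """Best-case round-trip time in milliseconds over a straight fiber path."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_MS


for km in (50, 500, 3000):
    print(f"{km:>5} km -> at least {min_rtt_ms(km):.1f} ms per round trip")
```

A new HTTPS connection typically needs several round trips before the first byte arrives (TCP handshake, TLS handshake, then the request itself), so every extra 1,000 km to the edge is paid for more than once.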

This is where a multi-CDN setup can make a difference.

How Multi-CDN Actually Works For Latency

A single CDN has a fixed map of where its edge servers are. Some providers are excellent in North America and Europe but weaker in Africa or parts of Asia. Others are strong in South America but patchy elsewhere.

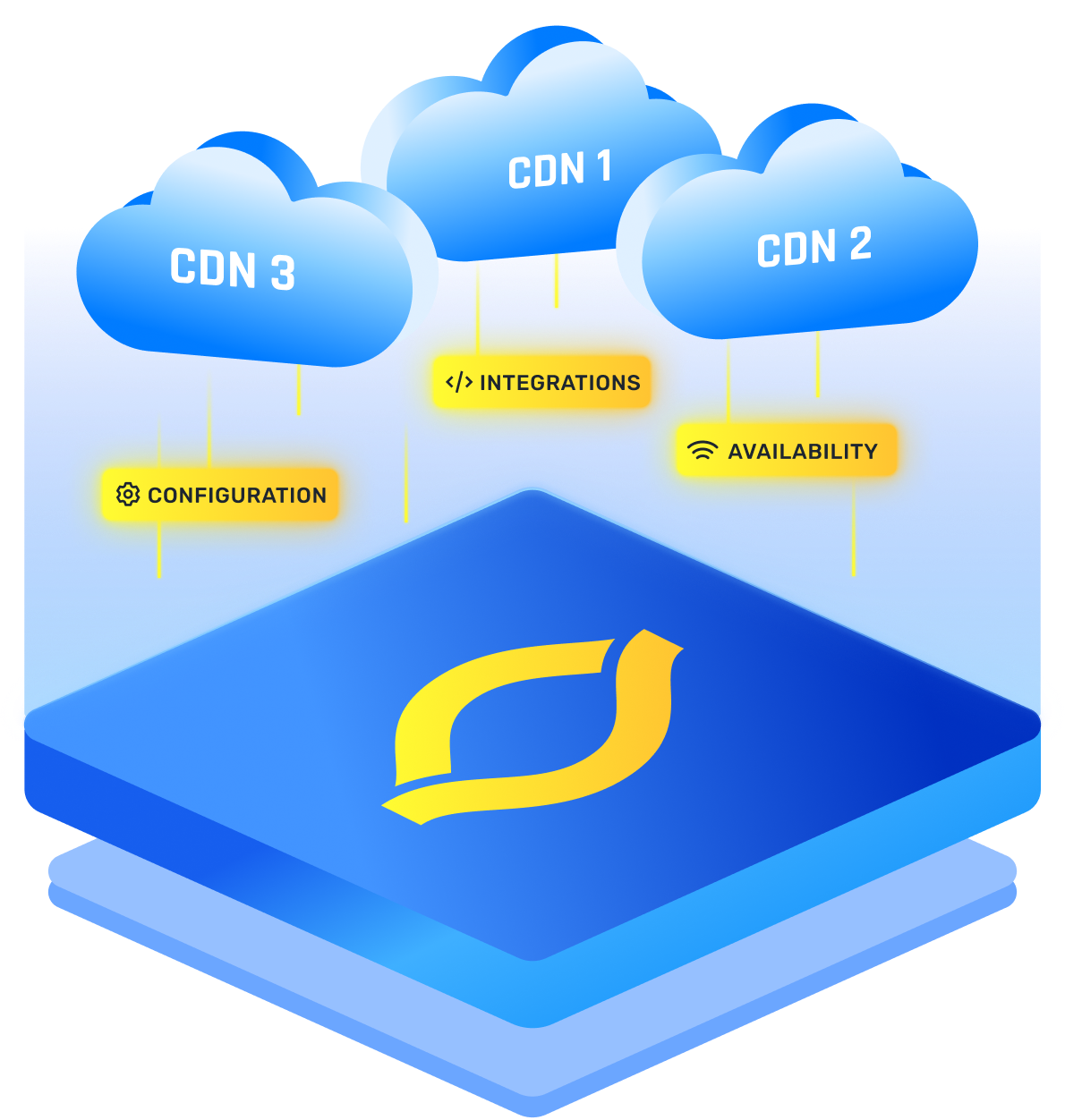

With a multi-CDN setup, you don’t rely on just one map. Instead, you can route traffic through whichever provider has the strongest local presence at the moment.

Here’s a simple way to picture it:

- Single CDN: Like using only one airline. If they don’t fly to a certain airport, tough luck; you’ll be forced into long layovers.

- Multi-CDN: More like having access to all airlines. You pick whichever one gets you closest, fastest.

When implemented correctly, your traffic automatically shifts to whichever CDN offers the lowest latency for the user’s location.
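The "more than one map" idea can be sketched as a nearest-PoP lookup across providers. Everything here is hypothetical: the provider names `cdn_a` and `cdn_b`, their PoP lists, and the user location are made up for illustration. Geographic distance is also only a proxy, since real routing follows network paths, not straight lines.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0


def haversine_km(a, b):
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    lat1, lon1, lat2, lon2 = map(radians, (*a, *b))
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(h))


# Hypothetical PoP maps; real providers publish their own location lists.
POPS = {
    "cdn_a": {"Sydney": (-33.87, 151.21), "Singapore": (1.35, 103.82)},
    "cdn_b": {"Auckland": (-36.85, 174.76), "Tokyo": (35.68, 139.69)},
}


def nearest_pop(user):
    """Return (provider, city, distance_km) for the closest PoP on any map."""
    return min(
        ((provider, city, haversine_km(user, loc))
         for provider, pops in POPS.items()
         for city, loc in pops.items()),
        key=lambda item: item[2],
    )


suva = (-18.14, 178.44)  # a user in Fiji
provider, city, km = nearest_pop(suva)
print(f"closest PoP: {city} on {provider}, about {km:.0f} km away")
```

With only `cdn_a` available, this user's nearest edge would be Sydney. Adding a second map brings a closer option into play.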

The Role Of Routing And Failover

It’s not enough to just sign contracts with two or three CDNs. The routing layer (the brain of your multi-CDN setup) is what makes the magic happen.

You need smart load balancing that can:

- Measure real-time latency and availability across all CDNs.

- Run CDN latency tests from multiple regions.

- Automatically route a request to the lowest-latency option.

- Fail over quickly if one CDN goes down.

Think of this like a GPS app rerouting you around traffic jams. Without that rerouting, having multiple possible roads doesn’t help.
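The routing decision described above can be sketched in a few lines. This is a simplified illustration, not how any particular traffic-steering product works: the `CdnStatus` type, the `pick_cdn` function, and the sample numbers are all assumptions, and a real routing layer would also handle probe freshness, DNS TTLs, and cost.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class CdnStatus:
    name: str
    healthy: bool               # result of the latest availability check
    recent_rtt_ms: list[float]  # recent latency probes for one region


def pick_cdn(candidates: list[CdnStatus]) -> str:
    """Return the healthy CDN with the lowest median latency."""
    usable = [c for c in candidates if c.healthy and c.recent_rtt_ms]
    if not usable:
        raise RuntimeError("no healthy CDN available")
    return min(usable, key=lambda c: median(c.recent_rtt_ms)).name


# Hypothetical probe results for one remote region.
cdn_a = CdnStatus("cdn_a", healthy=True, recent_rtt_ms=[180, 210, 190])
cdn_b = CdnStatus("cdn_b", healthy=True, recent_rtt_ms=[95, 120, 110])

print(pick_cdn([cdn_a, cdn_b]))  # the lower-latency provider wins

cdn_b.healthy = False            # simulate an outage
print(pick_cdn([cdn_a, cdn_b]))  # traffic fails over to the remaining provider
```

Using the median rather than the latest probe keeps a single slow measurement from flipping traffic back and forth between providers.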

Where Multi-CDN Helps The Most

Multi-CDN shines in regions where coverage is uneven, where one provider has PoPs nearby and another does not.

If your users are clustered in big cities with plenty of CDN PoPs, a single CDN might do fine.

But if you serve audiences in places like rural India, parts of Africa, or small Pacific nations, a multi-CDN setup can improve latency.

Testing Multi-CDN Performance

Before you can claim victory, you’ll want to measure whether the setup is truly improving latency. A CDN latency test will usually give you three main insights:

- Baseline latency per region with a single CDN.

- Latency distribution when routing through multiple CDNs.

- Percentage of requests routed to each provider.

When I’ve run comparisons, the data usually shows clear patterns: some CDNs dominate in certain regions, while others are weaker. By combining them, you get consistently lower peaks in latency.

If you think of latency as a heartbeat graph, a single CDN has dramatic spikes in weaker areas. Multi-CDN smooths those spikes into something closer to a steady pulse.
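One way to get those three insights out of raw test data is sketched below. The sample numbers are invented purely to show the shape of the calculation, and the `summarize` helper and provider names are hypothetical. Each sample is a `(provider, latency_ms)` pair from one region.

```python
from collections import Counter
from statistics import median, quantiles


def p95(latencies):
    """95th percentile, interpolated within the observed range."""
    return quantiles(latencies, n=20, method="inclusive")[-1]


def summarize(samples):
    """Median, p95, and per-provider request share for one region."""
    latencies = [ms for _, ms in samples]
    counts = Counter(provider for provider, _ in samples)
    share = {p: n / len(samples) for p, n in counts.items()}
    return {"median": median(latencies), "p95": p95(latencies), "share": share}


# Invented samples for one remote region.
single = [("cdn_a", ms) for ms in (120, 130, 125, 410, 135, 128, 390, 122)]
multi = [("cdn_a", 120), ("cdn_b", 140), ("cdn_a", 125), ("cdn_b", 150),
         ("cdn_a", 135), ("cdn_a", 128), ("cdn_b", 145), ("cdn_a", 122)]

for label, samples in (("single CDN", single), ("multi-CDN", multi)):
    print(label, summarize(samples))
```

Comparing p95 rather than the average is what exposes the spikes: in this made-up data the medians are close, but the tail is where the two setups differ.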

Where It Won’t Help

It’s tempting to think multi-CDN solves every problem, but there are limits.

- Local ISP bottlenecks: If the user’s internet provider is slow, no CDN can fix that.

- Undersea cable outages: Sometimes all providers use the same cable; if that’s cut, everyone suffers.

- Application bottlenecks: A multi-CDN setup won’t save you if your backend server is slow.

In short: multi-CDN is a tool for optimizing edge delivery, not a cure-all for every kind of latency.

How You Might Decide

If you’re unsure whether a multi-CDN setup will really improve latency for your users, here’s a straightforward approach:

- Run a CDN latency test for your current provider.

  - Use multiple regions, especially remote ones where you know latency is higher.

- Compare against public data from other CDNs.

  - Most providers publish performance stats, or you can use third-party tools like Cedexis or Catchpoint.

- Identify weak spots.

  - Are certain regions consistently worse? If so, check whether another CDN performs better there.

- Pilot a multi-CDN setup.

  - Start by routing only a fraction of traffic. Measure whether latency improves.

- Decide on rollout.

  - If the gains are significant in your target markets, scale it up.
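For the pilot step, one common way to send only a fraction of traffic through the new setup is deterministic bucketing, sketched here. The `in_pilot` function and the user ID format are hypothetical. The idea is that hashing a stable identifier keeps each user on the same side of the split, so their before-and-after measurements stay comparable.

```python
import hashlib


def in_pilot(user_id: str, pilot_fraction: float) -> bool:
    """Deterministically place a user in the pilot group.

    The hash maps each ID to a stable value in [0, 1); users below the
    threshold get the multi-CDN routing, everyone else stays on the
    current provider.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return bucket < pilot_fraction


# Roughly 10% of a synthetic user population lands in the pilot.
users = [f"user-{i}" for i in range(10_000)]
pilot_share = sum(in_pilot(u, 0.10) for u in users) / len(users)
print(f"pilot share: {pilot_share:.1%}")
```

Raising `pilot_fraction` later only adds users to the pilot. Nobody already in it gets moved out, which makes a gradual rollout easier to reason about.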

What to Expect From Multi-CDN in Remote Regions

A multi-CDN setup won’t make every single request instant. What it does is improve the average experience across diverse geographies and reduce the number of users stuck with frustratingly high latency.

In other words, you move from “some users get a great experience while others suffer” to “most users get a consistently good experience.”

That shift matters. If you’re running a streaming service, it can be the difference between keeping or losing customers in remote regions. If you’re running a SaaS platform, it can prevent latency complaints from your international clients.

In theory, you’ll notice the biggest difference not in your fastest markets, but in the ones where performance used to be the weakest.
