When you're moving through the fast-paced world of the internet, you've probably never stopped to ponder the speed of light or how quickly electrical signals can pass through cables. Yet, these seemingly abstract ideas are foundational to your online experience.

Welcome to the complex universe of Round Trip Time (RTT), a metric that plays an integral role in shaping your digital interactions. This article is your deep dive into understanding what RTT is, its impact on performance, and the nitty-gritty of calculating it.

What is Round Trip Time?

RTT measures the time it takes for a data packet to leave its starting point, reach its destination, and return. This round-trip adventure is measured in milliseconds (ms), providing crucial insights into network latency and overall performance. A data packet is a small chunk of a larger piece of data, which is deliberately split into smaller, manageable sections for transmission.

But why should you care? Well, consider RTT as the heartbeat of network communications. It affects everything from page loading times to the quality of video calls. If you're into gaming, a high RTT can be the difference between life and death for your in-game character.

In e-commerce, poor RTT can lead to page timeouts, affecting user experience and, consequently, sales. This isn't some concept locked away in the box of network engineering; it's the lifeblood of all your online activities.

RTT vs TTFB – What’s the Difference?

While round trip time (RTT) refers to the full time a packet takes to go to the server and back, Time to First Byte (TTFB) is just the time taken for the browser to receive the first byte of data after making a request.

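TTFB includes at least one full RTT, since the request must reach the server and the first byte must travel back. On top of that, it adds the server's processing time, plus extra round trips for connection setup when the connection is new. If your RTT is low but TTFB is high, your server may be slow to respond even though your connection is fast.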
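To see how the two numbers relate, here is a rough model. It assumes DNS is already cached and no packets are lost. A new connection spends one round trip on the TCP handshake. A TLS 1.3 handshake adds one more, and a full TLS 1.2 handshake typically adds two. The HTTP request and the first byte of the response then take a final round trip, and server processing time is added on top. The function and its parameters are hypothetical, a sketch rather than a measurement tool.

```python
def estimate_ttfb_ms(rtt_ms: float, server_processing_ms: float,
                     new_connection: bool = True, tls_round_trips: int = 1) -> float:
    """Rough TTFB model: round trips x RTT + server processing time.

    Ignores DNS lookups, packet loss and TLS session resumption.
    tls_round_trips: 0 = plain HTTP, 1 = TLS 1.3 full handshake,
    2 = TLS 1.2 full handshake.
    """
    round_trips = 1                          # HTTP request out, first response byte back
    if new_connection:
        round_trips += 1 + tls_round_trips   # TCP handshake, then TLS handshake
    return float(round_trips * rtt_ms + server_processing_ms)


print(estimate_ttfb_ms(40, 20))                         # 140.0 (new TLS 1.3 connection)
print(estimate_ttfb_ms(40, 20, new_connection=False))   # 60.0 (reused connection)
print(estimate_ttfb_ms(40, 500, new_connection=False))  # 540.0 (fast network, slow server)
```

The last line is the "low RTT, high TTFB" case. The network round trip is only 40 ms, and the remaining 500 ms comes entirely from the server.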


How to Calculate RTT?

If you're expecting some magic that requires a Ph.D. in Computer Science to understand, you're in for a pleasant surprise. The math here is straightforward but involves a few variables you'll need to grasp.

The essence of RTT calculation lies in two key aspects: the server side and the client side. Each has its own 'mini' RTT, which needs to be averaged out for a complete picture.

Let's break it down:

- Average RTT: This is calculated by taking the mean of various RTTs. Mathematically, it can be denoted as:

$$\text{Average RTT} = \frac{\text{RTT}_{s1} + \text{RTT}_{s2}}{2}$$

To put this in real-world terms, imagine you're an archer. You shoot arrows at two targets, one closer and one farther away. The average time it takes for arrows to hit these targets and return represents the server and client RTTs.

Combine these, and you get a holistic understanding of your archery setup's efficiency, much like how combining server and client RTTs gives you the network's total RTT.

However, this is a simplification. In reality, multiple samples are taken to account for variances in latency, jitter, and packet loss. A more advanced approach involves statistical techniques to remove outliers and arrive at a more representative value. Don't be fooled into thinking it's just a matter of adding two numbers; this is network science, after all.
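Here is a minimal sketch of that idea. It assumes you already have a list of RTT samples in milliseconds. Before averaging, it discards samples outside Tukey's fences (1.5 × the interquartile range). It also reports a simple jitter figure: the mean absolute difference between consecutive samples. The IQR rule is just one common outlier filter, swapped in here for illustration. Other approaches, such as trimmed means or plain medians, are equally valid.

```python
import statistics


def robust_rtt_summary(samples_ms: list[float]) -> dict[str, float]:
    """Summarize RTT samples, dropping outliers with the 1.5 x IQR rule."""
    if len(samples_ms) < 4:
        raise ValueError("need at least 4 samples to estimate quartiles")

    # Quartiles of the samples; anything outside [Q1 - 1.5*IQR, Q3 + 1.5*IQR] is dropped
    q1, _, q3 = statistics.quantiles(samples_ms, n=4)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    kept = [s for s in samples_ms if low <= s <= high]

    # Simple jitter measure: average change between consecutive raw samples
    diffs = [abs(b - a) for a, b in zip(samples_ms, samples_ms[1:])]

    return {
        "naive_mean_ms": statistics.fmean(samples_ms),
        "filtered_mean_ms": statistics.fmean(kept),
        "median_ms": statistics.median(samples_ms),
        "jitter_ms": statistics.fmean(diffs),
        "outliers_dropped": float(len(samples_ms) - len(kept)),
    }


samples = [21.0, 22.0, 20.0, 23.0, 21.0, 250.0, 22.0]  # hypothetical: one 250 ms spike
summary = robust_rtt_summary(samples)
print(summary)
```

In this example, the single 250 ms spike pulls the plain mean above 54 ms. The filtered mean stays at 21.5 ms, which is much closer to what the connection usually delivers.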

RTT Journey

Client --RTTc1--> Server --RTTs1--> Client --RTTc2--> Server --RTTs2--> Client

- RTTc1 represents the time taken for the first packet to travel from the client to the server.

- RTTs1 is the time it takes for the server to respond to that packet and for the response to reach the client.

- RTTc2 and RTTs2 represent the same journey for a second packet.

The RTT for each packet is the sum of its client-side trip and server-side trip.
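Following the journey above, each packet's RTT is its client-to-server leg plus its server-to-client leg. Averages are then taken over those per-packet totals. The leg timings below are made-up numbers for illustration. In practice the two legs are rarely measured separately, because one-way timing requires synchronized clocks on both ends.

```python
from dataclasses import dataclass
from statistics import fmean


@dataclass
class PacketTrip:
    client_to_server_ms: float  # outbound leg, e.g. RTTc1
    server_to_client_ms: float  # return leg, e.g. RTTs1

    @property
    def rtt_ms(self) -> float:
        # RTT for one packet = outbound leg + return leg
        return self.client_to_server_ms + self.server_to_client_ms


# Hypothetical timings for two packets (RTTc1/RTTs1 and RTTc2/RTTs2)
trips = [PacketTrip(10.0, 12.0), PacketTrip(11.0, 15.0)]
per_packet = [t.rtt_ms for t in trips]
average_rtt = fmean(per_packet)
print(per_packet, average_rtt)  # [22.0, 26.0] 24.0
```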

When measuring RTT, multiple packets are often sent to get a more accurate average, accounting for potential changes in network conditions. The more packets you send, the more comprehensive your understanding of the network's performance will be.
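TCP itself copes with changing conditions by keeping a smoothed estimate rather than a plain average. The sketch below follows the update rules in RFC 6298:

- SRTT and RTTVAR are updated with alpha = 1/8 and beta = 1/4.
- The retransmission timeout is RTO = SRTT + max(G, 4 × RTTVAR).

The class name is hypothetical and the clock granularity G is assumed to be 1 ms. The RFC's 1-second minimum RTO is left out to keep the arithmetic visible.

```python
from typing import Optional


class RttEstimator:
    """Smoothed RTT tracker using the RFC 6298 update rules (simplified sketch)."""

    ALPHA = 1 / 8   # weight given to each new sample in SRTT
    BETA = 1 / 4    # weight given to each new deviation in RTTVAR
    K = 4           # RTO = SRTT + max(G, K * RTTVAR)
    G_MS = 1.0      # assumed clock granularity in ms

    def __init__(self) -> None:
        self.srtt_ms: Optional[float] = None
        self.rttvar_ms = 0.0

    def update(self, sample_ms: float) -> float:
        """Feed one RTT measurement and return the new retransmission timeout (ms).

        The RFC's 1-second minimum RTO is deliberately omitted here.
        """
        if self.srtt_ms is None:
            # First measurement: SRTT = R, RTTVAR = R / 2
            self.srtt_ms = sample_ms
            self.rttvar_ms = sample_ms / 2
        else:
            # RTTVAR must be updated with the previous SRTT, so do it first
            self.rttvar_ms = ((1 - self.BETA) * self.rttvar_ms
                              + self.BETA * abs(self.srtt_ms - sample_ms))
            self.srtt_ms = (1 - self.ALPHA) * self.srtt_ms + self.ALPHA * sample_ms
        return self.srtt_ms + max(self.G_MS, self.K * self.rttvar_ms)


est = RttEstimator()
timeouts = [est.update(s) for s in (100.0, 100.0, 100.0, 300.0)]
print(est.srtt_ms, timeouts)  # 125.0 [300.0, 250.0, 212.5, 409.375]
```

A single 300 ms spike moves SRTT only from 100 to 125 ms. Meanwhile, the growing RTTVAR widens the timeout, so one slow packet does not trigger premature retransmissions.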

Measuring Round Trip Time with Ping

One of the simplest and most commonly used ways to measure round trip time (RTT) is the ping command. It sends small ICMP Echo Request packets to a specified IP address or domain and measures how long each reply takes to come back.

Example:

ping google.com

On Windows, you'll see output like:

Reply from 142.250.68.14: bytes=32 time=21ms TTL=55

In this case, the time=21ms indicates the RTT. Keep in mind, actual application RTT may vary slightly due to additional processing on the server or network conditions.
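To collect those numbers programmatically, a small parser can pull the time field out of ping's output. This sketch assumes the two common formats:

- Windows: `time=21ms`, or `time<1ms` for sub-millisecond replies.
- Linux/macOS: `time=21.3 ms`.

The Linux-style sample line below is illustrative, not captured output.

```python
import re

# Matches "time=21ms" and "time<1ms" (Windows) as well as "time=21.3 ms" (Linux/macOS).
# Summary lines such as "time 3004ms" or "Request timed out." are not matched.
TIME_RE = re.compile(r"time[=<]\s*(\d+(?:\.\d+)?)\s*ms")


def parse_ping_rtts(output: str) -> list[float]:
    """Extract per-reply RTTs in ms from ping output text.

    Note: "time<1ms" is returned as 1.0, which is an upper bound, not an exact value.
    """
    return [float(m.group(1)) for m in TIME_RE.finditer(output)]


sample = """Reply from 142.250.68.14: bytes=32 time=21ms TTL=55
64 bytes from 142.250.68.14: icmp_seq=2 ttl=55 time=19.8 ms
Reply from 192.168.1.1: bytes=32 time<1ms TTL=64"""
print(parse_ping_rtts(sample))  # [21.0, 19.8, 1.0]
```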

Using ping helps network administrators get a quick snapshot of network latency and potential issues.
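Ping relies on ICMP, which some firewalls block or deprioritize. Sending raw ICMP packets from your own code also usually requires elevated privileges. A common alternative is to time a TCP handshake. `connect()` returns once the server's SYN-ACK arrives, so its duration approximates one RTT plus a little local overhead. Here is a standard-library Python sketch. The function name is hypothetical, and the host in the usage comment is a placeholder.

```python
import socket
import time


def tcp_connect_rtt_ms(host: str, port: int, timeout_s: float = 2.0) -> float:
    """Approximate RTT by timing a TCP handshake to host:port.

    DNS resolution happens before the timer starts, so it is not counted.
    """
    family, socktype, proto, _, addr = socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM)[0]
    with socket.socket(family, socktype, proto) as sock:
        sock.settimeout(timeout_s)
        start = time.perf_counter()
        sock.connect(addr)  # returns once SYN -> SYN-ACK has completed
        return (time.perf_counter() - start) * 1000


# Usage (needs network access; example.com:443 is only a placeholder target):
# samples = [tcp_connect_rtt_ms("example.com", 443) for _ in range(5)]
```

Take several samples, as with ping. The first connection to a host can also include one-off costs such as ARP resolution on the local network.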

Factors Affecting RTT

When we talk about RTT, it's not as simple as A to B and back to A. The path between these two points is a mix of variables, each contributing its own share of delay and inconsistencies.

Think of it like a treasure hunt where each leg of the journey poses different challenges. Let's dissect these factors.

Physical Distance

The farther the server is from the user, the longer the data packets take to travel back and forth. Imagine sending a messenger on horseback from New York to San Francisco versus New York to Tokyo.

The distance alone can make a notable difference in RTT.
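Distance sets a hard floor on RTT. Light in optical fiber travels at roughly two-thirds of its vacuum speed, about 200,000 km/s. Even a perfectly straight cable therefore cannot beat 2 × distance ÷ speed. The sketch below computes that floor from great-circle distance using the haversine formula. The city coordinates are approximate. Real cable routes are longer than the great-circle path and add routing and queuing delays, so measured RTTs will be higher.

```python
import math

FIBER_SPEED_KM_PER_MS = 200.0   # assumed ~2/3 of c, i.e. about 200,000 km/s
EARTH_RADIUS_KM = 6371.0


def great_circle_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Haversine distance between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def min_rtt_ms(distance_km: float) -> float:
    """Lower bound on RTT: out and back at fiber speed, no queuing or processing."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_MS


NEW_YORK = (40.71, -74.01)
SAN_FRANCISCO = (37.77, -122.42)
TOKYO = (35.68, 139.69)

for name, city in [("San Francisco", SAN_FRANCISCO), ("Tokyo", TOKYO)]:
    d = great_circle_km(*NEW_YORK, *city)
    print(f"New York -> {name}: {d:,.0f} km, RTT >= {min_rtt_ms(d):.0f} ms")
```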

Origin Server Response Time

Measured in Time to First Byte (TTFB), this is essentially how quickly an origin server can process a request and start sending data back.

If a server is bogged down with too many requests, say during a DDoS attack, the RTT skyrockets.

Transmission Medium

Whether it's optical fiber, copper cables, or wireless, the medium through which the data travels affects the speed.

Think of it like travelling on a highway, a dirt road, or a congested city street. Each provides a different speed and quality of travel.

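Specifically, optical fiber carries data as pulses of light. It supports much higher transmission rates over long distances with little signal loss, so packets spend less time being put on the wire and fewer repeaters are needed along the way. Copper cables, on the other hand, are susceptible to electromagnetic interference, which can corrupt packets and force retransmissions that add to the RTT.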

Local Area Network (LAN) Traffic

Heavy local network traffic can bottleneck the data before it even reaches the broader internet.

Imagine trying to leave your neighborhood when everyone else is also rushing out; you get stuck before you can even hit the open road.

Node Count and Congestion

The data doesn't travel in a straight line but hops through various nodes (routers, switches, etc.). Each hop adds a small delay, and if any of these nodes are congested, the delay compounds.

Visualize it as taking a flight with multiple layovers; each layover adds to your total travel time.
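The layover effect can be modeled with the textbook per-hop (nodal) delay: processing + queuing + transmission + propagation, summed over every hop. To show how congestion compounds, this sketch models each router's outgoing queue as an idealized M/M/1 queue. In that model, the average time a packet spends queued and being transmitted is 1 ÷ (service rate − arrival rate). The hop parameters are invented for illustration, and real router queues are far less tidy than M/M/1.

```python
from dataclasses import dataclass


@dataclass
class Hop:
    service_rate: float    # packets per ms the outgoing link can send (mu)
    arrival_rate: float    # packets per ms arriving for that link (lambda)
    processing_ms: float   # header lookup and forwarding decision
    propagation_ms: float  # signal travel time on the outgoing link

    def delay_ms(self) -> float:
        if self.arrival_rate >= self.service_rate:
            return float("inf")  # queue grows without bound
        # M/M/1 average time in system (queuing + transmission): 1 / (mu - lambda)
        in_system_ms = 1.0 / (self.service_rate - self.arrival_rate)
        return self.processing_ms + in_system_ms + self.propagation_ms


def path_one_way_ms(hops: list[Hop]) -> float:
    """One-way delay is the sum of every hop's delay."""
    return sum(h.delay_ms() for h in hops)


for load in (0.5, 0.9, 0.99):
    hops = [Hop(10.0, 10.0 * load, 0.05, 2.0) for _ in range(5)]
    rtt = 2 * path_one_way_ms(hops)  # assume the return path looks the same
    print(f"utilization {load:.0%}: RTT ~ {rtt:.1f} ms")
```

With five identical hops, the modeled RTT is about 22.5 ms at 50% utilization. At 99% it climbs past 100 ms, even though no link got any longer.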

Strategies for RTT Optimization

Optimizing RTT is not unlike tuning a musical instrument; each string (or factor) requires individual attention for the instrument to produce optimal sound. Here are some strategies to fine-tune your RTT.

Geographical Load Balancing

By distributing incoming network traffic across multiple servers located in different geographical locations, you can significantly lower the physical distance data has to travel, thereby reducing RTT.

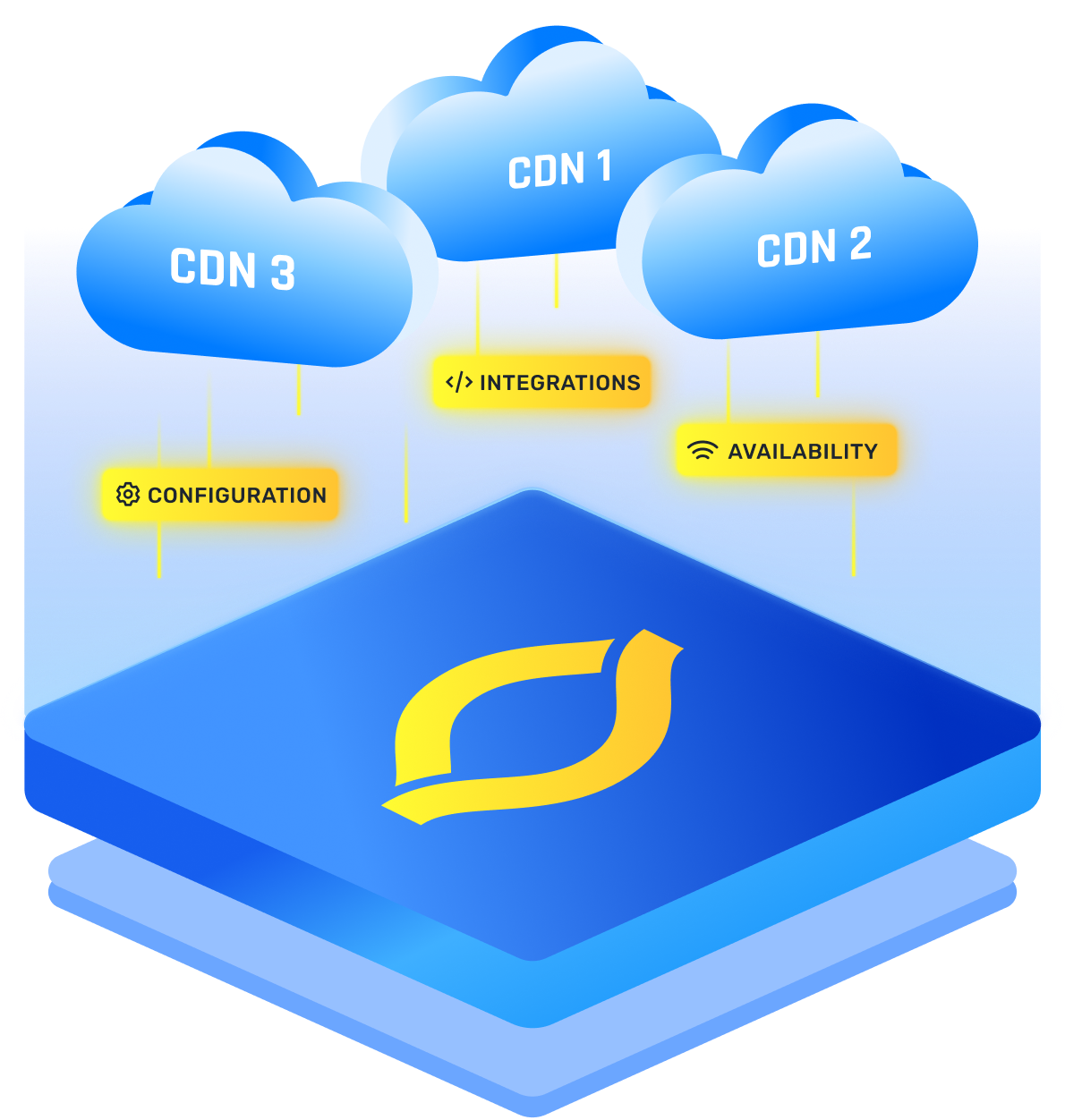
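Once servers exist in several regions, something has to decide where each user goes. Here is a minimal sketch of one approach, assuming you already have RTT probe results per region: send the user to the region with the lowest median RTT. The region names and probe values are hypothetical. Real global load balancers also weigh server health and capacity.

```python
from statistics import median


def pick_region(rtt_samples_ms: dict[str, list[float]]) -> str:
    """Return the region whose median RTT is lowest.

    The median is used so one slow probe doesn't disqualify a region.
    Regions with no successful samples are skipped.
    """
    candidates = {region: median(s) for region, s in rtt_samples_ms.items() if s}
    if not candidates:
        raise ValueError("no region has any RTT samples")
    return min(candidates, key=candidates.get)


probes = {
    "us-east": [82.0, 80.5, 310.0],       # one slow probe
    "eu-west": [24.0, 26.5, 25.1],
    "ap-northeast": [240.0, 238.2, 241.7],
}
print(pick_region(probes))  # eu-west
```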

CDN Utilization

Content Delivery Networks (CDNs) store cached versions of your website's content in multiple geographical locations.

This way, a user's requests are directed to the nearest server, reducing RTT substantially.

Additionally, CDNs keep persistent, already-established connections open to the origin server, so requests that do have to reach the origin skip the extra round trips of setting up a new connection.

By bringing content to the edge of the network, CDNs ensure that the client's requests don't have to travel all the way to the origin, making content delivery faster and more efficient.
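A back-of-the-envelope model makes the benefit visible. Assume a cache hit costs one client-to-edge round trip. A miss adds one edge-to-origin round trip over a connection the CDN already keeps open, so no new handshakes are needed. The function and all the numbers are illustrative assumptions, not measurements of any particular CDN.

```python
def expected_request_rtt_ms(client_edge_ms: float, edge_origin_ms: float,
                            hit_ratio: float) -> float:
    """Expected network round-trip time for one request through a CDN edge.

    Hits are served from the edge; misses add an edge -> origin round trip
    over an already-open connection (no new TCP/TLS handshakes).
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    miss_ratio = 1.0 - hit_ratio
    return client_edge_ms + miss_ratio * edge_origin_ms


direct_to_origin_ms = 120.0  # hypothetical user far from the origin
via_cdn = expected_request_rtt_ms(client_edge_ms=15.0, edge_origin_ms=110.0, hit_ratio=0.9)
print(f"direct: {direct_to_origin_ms} ms, via CDN: {via_cdn:.1f} ms")  # direct: 120.0 ms, via CDN: 26.0 ms
```

In this model, a 0% hit ratio makes the CDN path slightly slower than going direct (125 ms versus 120 ms). This is why cache hit ratio matters as much as edge proximity.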


Optimize Server Performance

A server that can quickly process and respond to requests contributes to a lower RTT. Efficient code, faster algorithms, and better hardware can all contribute to this.

Traffic Shaping

Traffic shaping, often referred to as "packet shaping" or "bandwidth management," is a networking technique that involves controlling the volume and speed of data transmission to optimize or guarantee specific levels of performance, latency, and bandwidth availability.
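A classic mechanism for controlling the volume and speed of traffic is the token bucket. Tokens accumulate at a fixed rate up to a burst limit, and a packet may be sent only if enough tokens (here, bytes) are available. This is a minimal single-threaded sketch with hypothetical names. It uses an injectable clock so the behavior can be tested. Production shapers, such as the token bucket filter in Linux traffic control, are considerably more involved.

```python
import time
from typing import Callable


class TokenBucket:
    """Byte-based token bucket: refills at `rate` bytes/s, holds at most `burst` bytes."""

    def __init__(self, rate: float, burst: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.burst = burst
        self.tokens = burst   # start full so an initial burst is allowed
        self.clock = clock
        self.last = clock()

    def _refill(self) -> None:
        now = self.clock()
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def try_send(self, packet_bytes: int) -> bool:
        """Return True (and spend tokens) if the packet may be sent right now."""
        self._refill()
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes
            return True
        return False  # non-conforming: a shaper queues or delays it


fake_now = [0.0]  # fake clock in seconds, for a deterministic demo
bucket = TokenBucket(rate=1000.0, burst=1500.0, clock=lambda: fake_now[0])
print(bucket.try_send(1500))  # True: bucket starts full
print(bucket.try_send(1500))  # False: no tokens left
fake_now[0] = 1.5             # 1.5 s later, 1500 bytes of tokens have accrued
print(bucket.try_send(1500))  # True
```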

The primary objective of traffic shaping is to ensure that the network serves critical or prioritized applications or services better than those of lower priority.

One of the main ways this is accomplished is by prioritizing certain types of data packets over others. The type of data and its importance to the primary function of a network or service determines its priority. For example, in the context of a video conferencing service, video and audio data must be given priority.

This is because these types of data are time-sensitive; delays in transmission can severely impact the quality of the call and user experience.

When you give a higher priority to video and audio data over other types of data, such as text messages or files being sent during the conference, the system ensures that users experience smoother video and clearer audio during their meetings.
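The prioritization described above can be sketched as a strict-priority scheduler. Every queued packet carries a traffic class, and the sender always transmits the highest-priority packet waiting. The class names and priority values are hypothetical, chosen to mirror the video-conferencing example. Real networks often express such classes with DSCP markings in the IP header.

```python
import heapq
import itertools
from typing import Optional

# Lower number = higher priority (hypothetical classes for a video call)
PRIORITY = {"audio": 0, "video": 1, "chat": 2, "file": 3}


class PriorityScheduler:
    """Strict-priority queue: always send the most important waiting packet."""

    def __init__(self) -> None:
        self._heap: list = []
        self._seq = itertools.count()  # tie-breaker keeps FIFO order within a class

    def enqueue(self, traffic_class: str, payload: bytes) -> None:
        entry = (PRIORITY[traffic_class], next(self._seq), traffic_class, payload)
        heapq.heappush(self._heap, entry)

    def dequeue(self) -> Optional[tuple]:
        """Return (traffic_class, payload), or None when nothing is queued."""
        if not self._heap:
            return None
        _, _, traffic_class, payload = heapq.heappop(self._heap)
        return traffic_class, payload


sched = PriorityScheduler()
for cls, data in [("file", b"chunk-1"), ("chat", b"hi"), ("video", b"frame-1"),
                  ("audio", b"voice-1"), ("video", b"frame-2")]:
    sched.enqueue(cls, data)

sent = []
while (item := sched.dequeue()) is not None:
    sent.append(item)
print([cls for cls, _ in sent])  # ['audio', 'video', 'video', 'chat', 'file']
```

Under sustained load, strict priority can starve low-priority traffic entirely. For that reason, real schedulers often combine it with weighted fair queuing.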

Packet Size Adjustment

A packet is a unit of data sent over a network. It contains both the actual data and metadata, such as source and destination addresses.

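Smaller packets are quicker to transmit and process individually, but more of them are needed to transfer a given amount of data, and each one carries its own header overhead.

Larger packets are the opposite: fewer are needed, but each takes longer to transmit and costs more to resend if lost. Knowing the right packet size for your specific needs can help optimize RTT.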
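The trade-off is easy to quantify. Each packet carries a fixed header, so smaller packets mean more packets and more overhead. Each larger packet, in turn, takes longer to serialize onto the link (size ÷ bandwidth) before the next hop can forward it. The sketch assumes 40 bytes of IPv4 + TCP headers without options and ignores link-layer framing. The 10 Mbit/s link and 1 MB transfer are arbitrary examples.

```python
import math

HEADER_BYTES = 40  # assumed: 20-byte IPv4 header + 20-byte TCP header, no options


def transfer_stats(data_bytes: int, packet_bytes: int, link_mbps: float) -> dict:
    """Packet count, header overhead and per-packet serialization delay."""
    payload = packet_bytes - HEADER_BYTES
    if payload <= 0:
        raise ValueError("packet must be larger than its headers")
    packets = math.ceil(data_bytes / payload)
    header_total = packets * HEADER_BYTES
    bits_per_ms = link_mbps * 1000  # 1 Mbit/s = 1,000 bits per millisecond
    return {
        "packets": packets,
        "overhead_pct": 100 * header_total / (data_bytes + header_total),
        "serialization_ms": packet_bytes * 8 / bits_per_ms,  # time to put one packet on the wire
    }


# 576: minimum datagram size IPv4 hosts must accept; 1500: Ethernet MTU; 9000: jumbo frame
for size in (576, 1500, 9000):
    print(size, transfer_stats(data_bytes=1_000_000, packet_bytes=size, link_mbps=10))
```

On this assumed link, moving from 576-byte to 9000-byte packets cuts the packet count from 1866 to 112. However, each packet now occupies the link for 7.2 ms instead of about 0.46 ms.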

Conclusion

In essence, Round Trip Time (RTT) serves as the pulse of your network's health, affecting everything from webpage loading times to the quality of real-time video streaming.

Just as a conductor needs to understand every instrument in the orchestra to create a symphony, you need a deep understanding of RTT to orchestrate a seamless online experience.

FAQs

Q: Why is Round Trip Time important in networking?

Round Trip Time (RTT) is a critical performance metric that helps identify delays between sending a request and receiving a response. In networking, it reveals how fast data travels across your network and is used to optimize latency, improve application performance, and diagnose slow connections.

Q: What is the difference between Round Trip Time and Latency?

Latency generally refers to the one-way delay between a request and its destination, while RTT is the full journey, from sender to receiver and back. So, RTT ≈ 2 × latency under ideal conditions (a symmetric path and negligible processing time). Both are important for evaluating network performance, but RTT offers a more complete picture.

Q: What is a good Round Trip Time for online services?

A good RTT for online services varies by application, but in general:

- <20ms is excellent (ideal for gaming, VoIP)

- 20–100ms is good (for browsing, streaming)

- Above 150ms may cause noticeable delays

Lower RTTs ensure smoother interactions, especially for real-time services like video conferencing or multiplayer games.
