Does the CDN Support Serving Stale Content?

Table of contents

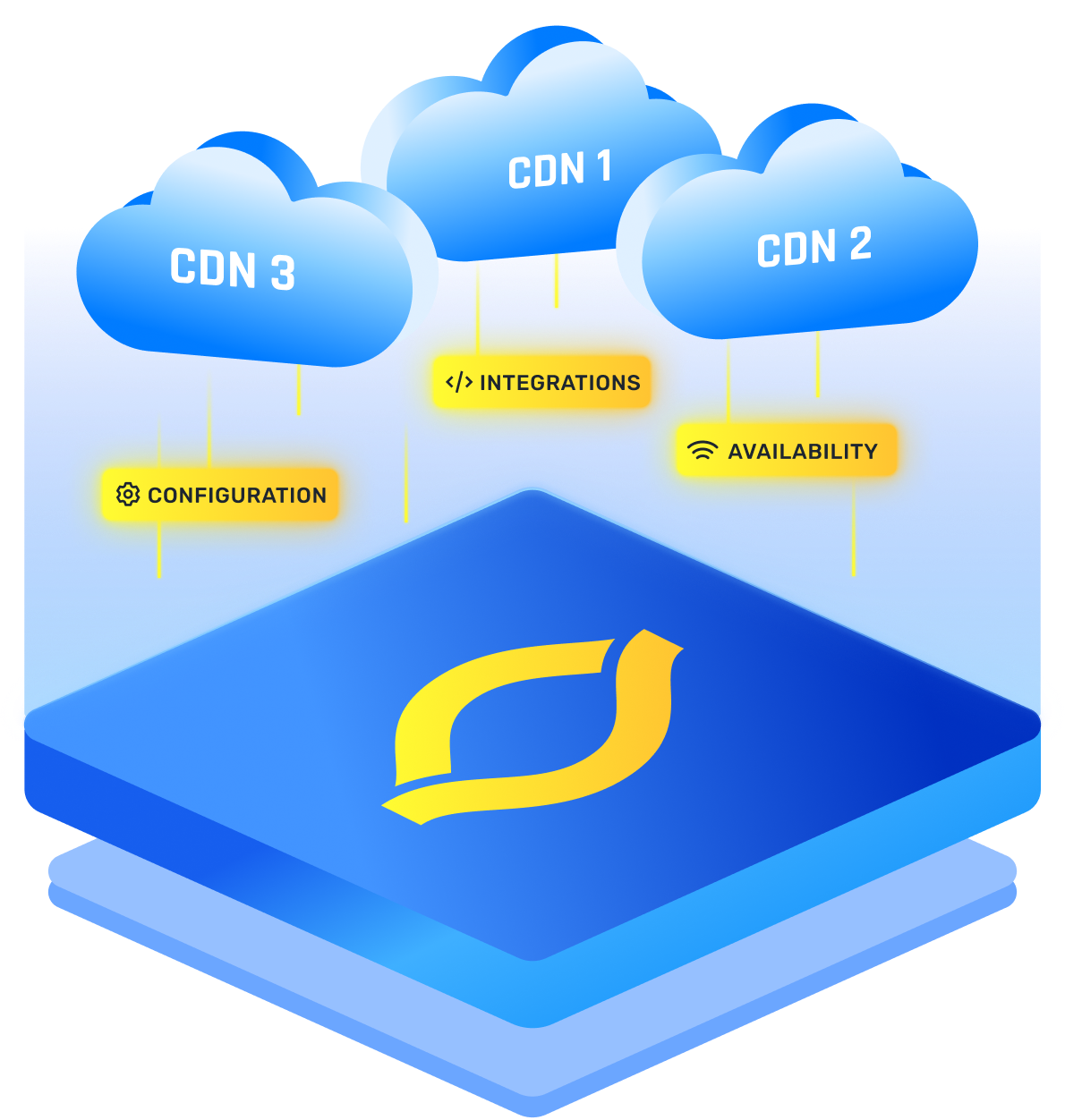

Yes, a CDN can serve stale content when configured to do so, usually through the stale-while-revalidate and stale-if-error extensions to the HTTP Cache-Control header (defined in RFC 5861). These let the CDN keep serving content from its cache when the origin server is slow to respond or down, giving the end user a smoother experience.

Stale Content

Imagine you're trying to deliver a critical web service during peak traffic, and the last thing you want is downtime. If the origin server crashes, or there's an issue during content retrieval, the CDN doesn't need to just sit there waiting or serve an error message. It can grab that stale content out of its cache and keep things running for the user on the other end.

I’ve always seen the "stale content" feature as more of a fallback mechanism. You can configure the CDN to serve stale cache data for a specified amount of time while it attempts to retrieve the most up-to-date version from the origin. In practice, this keeps the experience smooth for users, which is what you and I care about most.

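Serving stale content also helps keep the cache hit ratio high, because requests that would otherwise wait on the origin are still answered from cache. Some CDNs report cache hit ratios above 90% during peak times, meaning more than 90% of requests are served from cache without a round trip to the origin.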
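Stale responses raise the hit ratio because they count as cache hits. The sketch below shows this by computing the ratio from a list of per-request cache statuses. The status labels and the `cache_hit_ratio` helper are hypothetical. Real CDNs report their own status values, so check your provider's documentation before reusing the label set.

```python
from collections import Counter
from typing import Iterable

# Hypothetical status labels, loosely modeled on the cache-status values CDNs
# report in debug headers; check your provider's documentation for its names.
SERVED_FROM_CACHE = {"HIT", "STALE", "UPDATING"}


def cache_hit_ratio(statuses: Iterable[str]) -> float:
    """Return the fraction of requests answered from cache.

    Responses served stale count as hits: the user got an answer without
    waiting for the origin.
    """
    counts = Counter(status.upper() for status in statuses)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    served = sum(counts[label] for label in SERVED_FROM_CACHE)
    return served / total


if __name__ == "__main__":
    log = ["HIT"] * 85 + ["STALE"] * 6 + ["MISS"] * 9
    print(f"hit ratio: {cache_hit_ratio(log):.0%}")  # hit ratio: 91%
```

If only `HIT` counted, the same log would give 85%. The extra six points come entirely from responses served stale.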

Key Configurations to Enable Stale Content Delivery

Here’s where the technical part comes in. CDNs usually control stale delivery through two Cache-Control directives, stale-while-revalidate and stale-if-error. Each directive takes a time window in seconds.

- stale-while-revalidate: For a set number of seconds after a cached response goes stale, this directive lets the CDN keep serving that stale copy while it fetches a fresh version from the origin in the background. The user doesn’t wait on the origin because they get the cached content right away. Later requests get the refreshed copy once the background fetch completes.

- stale-if-error: This one is particularly useful for dealing with failures. The origin might return an error or be unreachable, because it’s down or a slow response times out. In that case the CDN serves the stale copy as a fallback, for up to the number of seconds the directive allows. The user sees slightly older content instead of a failure message.
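The sketch below shows how the two windows interact, as a single decision a cache makes for a stored response. It is a simplified reading of RFC 5861, which treats a 500, 502, 503, or 504 as an error for stale-if-error. The names `decide` and `Action`, and the use of `0` for "origin unreachable", are hypothetical. Real CDNs add details such as request collapsing and provider-specific limits.

```python
from enum import Enum

# Status codes RFC 5861 treats as errors for stale-if-error; 0 is a
# hypothetical stand-in for "origin unreachable or timed out".
ORIGIN_ERRORS = {0, 500, 502, 503, 504}


class Action(Enum):
    SERVE_FRESH = "serve cached copy (still fresh)"
    SERVE_STALE_AND_REVALIDATE = "serve stale copy, refresh in background"
    SERVE_FROM_ORIGIN = "wait for origin, serve its response"
    SERVE_STALE_ON_ERROR = "origin failed, serve stale copy"
    RETURN_ERROR = "origin failed, no usable cached copy"


def decide(age: int, max_age: int, swr: int = 0, sie: int = 0,
           origin_status: int = 200) -> Action:
    """Pick what a cache does with a stored response (all times in seconds).

    origin_status is what the origin would return if contacted synchronously.
    """
    if age < max_age:
        return Action.SERVE_FRESH
    staleness = age - max_age
    # Inside the stale-while-revalidate window the user never waits on the origin.
    if staleness < swr:
        return Action.SERVE_STALE_AND_REVALIDATE
    if origin_status not in ORIGIN_ERRORS:
        return Action.SERVE_FROM_ORIGIN
    # The origin failed: fall back to the stale copy if still inside stale-if-error.
    if staleness < sie:
        return Action.SERVE_STALE_ON_ERROR
    return Action.RETURN_ERROR


if __name__ == "__main__":
    # Cache-Control: max-age=60, stale-while-revalidate=30, stale-if-error=86400
    policy = dict(max_age=60, swr=30, sie=86400)
    print(decide(45, **policy))                      # Action.SERVE_FRESH
    print(decide(75, **policy))                      # Action.SERVE_STALE_AND_REVALIDATE
    print(decide(120, **policy, origin_status=503))  # Action.SERVE_STALE_ON_ERROR
```

The strict `<` comparisons mean a window of `0` disables that behavior entirely.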

When Would You Use This?

From my experience, you’ll want to consider enabling stale content delivery for use cases where availability is more important than immediate freshness. Think about situations like:

- News websites: A brief period of stale content isn’t going to harm anyone, especially if the alternative is downtime.

- E-commerce platforms: If an origin server is down and you’re serving product pages, it’s better for the user to see slightly outdated content than a broken page or error.

- APIs: I’ve set up CDN caching for APIs where it’s essential to avoid disruption. Even if the data is a few minutes out of date, it’s better than no data at all.

In high-traffic environments like news sites or e-commerce platforms, caching (and serving stale content when necessary) can reduce origin server load by as much as 80%. That reduction helps keep delivery consistent even under heavy user demand.
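The 80% figure can be tied to cache hit ratio with a rough calculation. Let N be the number of requests and h the hit ratio. This assumes every cache miss triggers exactly one origin request and ignores background revalidation traffic, which is a simplification. Under those assumptions, the fractional reduction R in origin load equals h, so an 80% reduction corresponds to a hit ratio of about 0.8:

```latex
\begin{aligned}
\text{origin requests} &\approx N\,(1 - h), \\
R &= 1 - \frac{N\,(1 - h)}{N} = h, \\
N = 10^{6},\ h = 0.8 &:\quad 10^{6}\,(1 - 0.8) = 2 \times 10^{5}\ \text{origin requests},\ R = 0.8.
\end{aligned}
```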

A CDN’s Flexibility with Cache

With most CDN providers, you get a lot of flexibility when it comes to cache management. I typically configure these options depending on how critical content freshness is for the client. When freshness isn’t mission-critical and the key priority is availability or avoiding downtime, I lean toward allowing stale content.

Here’s an example of how this might work in practice: You’ve got a site with a heavy backend API that can sometimes get overwhelmed by requests.

If you’ve set up your CDN with stale-while-revalidate or stale-if-error, users are unlikely to notice when the backend API slows down or goes down, as long as the cached copy is still within the configured stale window. The CDN keeps serving that cached (now "stale") response while it works on fetching a fresh version.
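The sketch below makes the backend-API scenario concrete with a minimal in-process cache that applies the same two windows around a slow or flaky fetch function. It illustrates the behavior, not how any CDN implements it. `StaleCache`, `fetch`, `clock`, and `spawn` are hypothetical names, and the sketch assumes the fetch function raises an exception when the backend fails.

```python
import threading
import time
from dataclasses import dataclass
from typing import Any, Callable, Dict, Set


@dataclass
class Entry:
    value: Any
    stored_at: float


def _start_thread(task: Callable[[], None]) -> None:
    threading.Thread(target=task, daemon=True).start()


class StaleCache:
    """In-process cache with stale-while-revalidate and stale-if-error windows.

    A hypothetical illustration of the CDN behavior, not any provider's API.
    Times are in seconds; fetch raises an exception when the backend fails.
    """

    def __init__(self, fetch: Callable[[str], Any], max_age: float, swr: float,
                 sie: float, clock: Callable[[], float] = time.monotonic,
                 spawn: Callable[[Callable[[], None]], None] = _start_thread):
        self._fetch, self._clock, self._spawn = fetch, clock, spawn
        self._max_age, self._swr, self._sie = max_age, swr, sie
        self._entries: Dict[str, Entry] = {}
        self._refreshing: Set[str] = set()
        self._lock = threading.Lock()

    def _store(self, key: str, value: Any) -> None:
        with self._lock:
            self._entries[key] = Entry(value, self._clock())

    def _refresh_in_background(self, key: str) -> None:
        with self._lock:
            if key in self._refreshing:  # at most one refresh per key at a time
                return
            self._refreshing.add(key)

        def task() -> None:
            try:
                self._store(key, self._fetch(key))
            except Exception:
                pass  # keep the stale copy; a later request will try again
            finally:
                with self._lock:
                    self._refreshing.discard(key)

        self._spawn(task)

    def get(self, key: str) -> Any:
        with self._lock:
            entry = self._entries.get(key)
        staleness = float("inf")
        if entry is not None:
            staleness = self._clock() - entry.stored_at - self._max_age
            if staleness < 0:
                return entry.value                # still fresh
            if staleness < self._swr:
                self._refresh_in_background(key)  # stale-while-revalidate
                return entry.value
        try:
            value = self._fetch(key)              # user waits on the backend
        except Exception:
            if entry is not None and staleness < self._sie:
                return entry.value                # stale-if-error fallback
            raise
        self._store(key, value)
        return value
```

To test it deterministically, you can pass a fake clock and `spawn=lambda task: task()`, so the background refresh runs inline.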

Configuring Your CDN for Stale Content

Most CDN providers, like Cloudflare, Fastly, and Akamai, have support for stale content built in. Most of the work is configuring your Cache-Control headers appropriately, though how each provider honors them varies. If you’re working with Cloudflare, for example, you can manage these settings directly in the dashboard or through the API.
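Since so much depends on the Cache-Control header, it helps to check what a response actually carries. For example, you can paste in a header value copied from your browser's developer tools. The sketch below shows a simplified parser for that check. `parse_cache_control` is a hypothetical helper, not a complete RFC 9111 parser.

```python
from typing import Dict, Optional


def parse_cache_control(header: str) -> Dict[str, Optional[int]]:
    """Split a Cache-Control value into {directive: seconds or None}.

    Simplified sketch: it assumes no quoted arguments containing commas
    (e.g. private="a, b") and maps non-numeric arguments to None.
    """
    directives: Dict[str, Optional[int]] = {}
    for part in header.split(","):
        name, _, value = part.strip().partition("=")
        name = name.strip().lower()
        if not name:
            continue
        value = value.strip().strip('"')
        directives[name] = int(value) if value.isdigit() else None
    return directives


if __name__ == "__main__":
    header = "public, max-age=60, stale-while-revalidate=30, stale-if-error=86400"
    print(parse_cache_control(header))
    # {'public': None, 'max-age': 60, 'stale-while-revalidate': 30, 'stale-if-error': 86400}
```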

Here’s how I’d typically handle it:

- TTL settings: This controls how long the CDN keeps content before considering it stale. When configuring, I think about how critical fresh content is. For static resources (e.g., images, CSS), a longer TTL is fine. But for dynamic content, I keep it short.

- Stale-while-revalidate: I might configure this for content where real-time freshness isn’t essential, but availability is. Think product pages or blog articles.

- Stale-if-error: I always have this configured for mission-critical applications. Better to serve stale content than fail altogether.
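The sketch below applies the policies above at the origin, written as a WSGI app that uses only Python's standard library. The path rules, TTL values, and the names `POLICIES`, `classify`, and `app` are hypothetical. The numbers are illustrative, not recommendations from any provider.

```python
from typing import Dict

# Hypothetical policy table: long TTL for static assets, short TTL plus
# stale-while-revalidate for pages, and stale-if-error added for the API.
POLICIES: Dict[str, str] = {
    "static": "public, max-age=86400",
    "page": "public, max-age=300, stale-while-revalidate=600",
    "api": "public, max-age=30, stale-while-revalidate=60, stale-if-error=86400",
}
STATIC_SUFFIXES = (".css", ".js", ".png", ".jpg", ".svg", ".woff2")


def classify(path: str) -> str:
    """Map a request path to one of the hypothetical policy classes."""
    if path.endswith(STATIC_SUFFIXES):
        return "static"
    if path.startswith("/api/"):
        return "api"
    return "page"


def app(environ, start_response):
    """Minimal WSGI origin that attaches a Cache-Control policy per path."""
    path = environ.get("PATH_INFO", "/")
    body = f"content for {path}\n".encode()
    start_response("200 OK", [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
        ("Cache-Control", POLICIES[classify(path)]),
    ])
    return [body]
```

For a quick local check, you could serve it with `wsgiref.simple_server.make_server("127.0.0.1", 8000, app).serve_forever()` and inspect the headers a CDN would receive.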

When You Wouldn’t Want to Serve Stale Content

Of course, there are times when serving stale content isn’t appropriate. If you're running an application where real-time updates are essential—think stock market data or live sports scores—you probably want to avoid serving anything that's out of date, even for a short period.

In those scenarios, I’d focus more on ensuring the origin infrastructure is robust enough to handle the load and prioritize lower TTLs on CDN caches to make sure data is always fresh.
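For real-time data, you can check that a response won't be served stale by looking for the directives that forbid it. RFC 9111's rules for serving stale responses cover no-cache, must-revalidate, and proxy-revalidate, and no-store keeps a response out of the cache entirely. The sketch below is minimal: `REALTIME_CACHE_CONTROL` and its one-second TTL are hypothetical, as are the helper names. CDNs can differ in how strictly they follow these directives, so verify against your provider's documentation.

```python
from typing import Set

# Directives that stop a shared cache such as a CDN from reusing a stale copy
# without revalidating it (no-store keeps the response out of the cache at all).
FORBIDS_STALE = frozenset({"no-store", "no-cache", "must-revalidate", "proxy-revalidate"})

# Illustrative header for real-time data: a very short TTL and no stale reuse.
REALTIME_CACHE_CONTROL = "public, max-age=1, must-revalidate"


def directive_names(header: str) -> Set[str]:
    """Lower-cased directive names in a Cache-Control value, ignoring arguments."""
    return {part.split("=", 1)[0].strip().lower()
            for part in header.split(",") if part.strip()}


def forbids_stale(header: str) -> bool:
    """True if the header rules out serving a stale copy of this response."""
    return bool(FORBIDS_STALE & directive_names(header))
```

The trade-off is the one described above. With must-revalidate, an unreachable origin means an error rather than old data, so the origin itself has to be robust.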

In my experience, whether or not you should serve stale content depends on your specific needs. For most applications, especially those that prioritize availability over real-time data, serving stale content through a CDN is a solid way to ensure that users continue to have a seamless experience, even if the origin servers hit a snag.